Why Don't We Talk Anymore?, Rhiannon + Protection Spells

Nice N Sleazy, 421 Sauchiehall St, Glasgow 11:00 PM Sep 24 2023

Why Don't We Talk Anymore?

Ongoing Mix Series & World Building Practice Fall 2023

Why Don't We Talk Anymore? is an open-format club night organized by myself and Rhiannon Sían Clucas, first put on at Nice N Sleazy in Glasgow, Scotland. The concept was based on Rhiannon's own artistic practice. I won't go into the details of her research here, but the visual language she developed centers on the derelict phone booths scattered around Glasgow as a metaphor for growing alienation. We felt that a club night unrestrained by genre could be the site for physical connection. We played Ambient, Bass, Techno, Hard-House, Pop Edits and Hardcore. Rhiannon's scans of old burner phones served as the basis for most of the visuals.

We are currently planning to continue the mixes as an international livestream and hope to open up to a wider variety of artists.

Cosmolin

Game Structures as Frameworks for Musical Expression Summer 2023

The Cosmolin was the creative result of my Master's research and was developed in August 2023. I have done a significant amount of writing on the topic of games as frameworks for musical expression, and I will be adding this writing serially to this page as I revise it with fresh eyes. The first iteration was a single composition and proof-of-concept which was shown in September 2023. I am currently optimizing and porting the project from Unity to the open source engine Godot for a possible wider release. The abstract for the project is below:

This project explores the musical possibility of games beyond experiencing soundscapes or imitating musical performance. The role of the designer and game system as both obstacles and motivators opens up co-creative possibilities through the play of video games.

It presents the game as an inherently resistive interactive system which refutes the ideal of the frictionless interface. The game can act as musical instrument and score through which the human performer, game system, and developer enter into creative and emergent a/synchronous dialogue. Games as instruments of play define their own economy and ecology through which certain sounds and gestures are afforded.

It identifies strategies for further investigation through the design and development of a sound-based game.

Membranes W/ Ellen Crofton @ Traumberg Festival

Schloss Dornburg an der Elbe, Lindenweg 2, 39264 Gommern, Germany Jul 27-30 2023

Yellow Crane Tower @ Live in the Lane

La Chunky Studio, 1103 Argyle St, Glasgow G3 8ND 2:00 PM Jul 24 2023

Max Bowen @ Vincent Jäger's Frei Interpreten Album Release

The Old Hairdressers, Renfield Ln, Glasgow G2 5AR 7:00 PM Jul 17 2023

Projections

Audio-Reactive / Non-Reactive Live Video Pieces for Musical Performances and VJing Ongoing

Aural Altars

Ambisonic Particle Synth Spring 2023

A few lines from Mark Kerins’s Beyond Dolby (Stereo): Cinema in the Digital Sound Age have always stuck out to me because they highlight a reservation I have about the current applications of VR and spatial audio as platforms for immersive experiences. Referring to the sweet spot imposed on the audience in digital surround sound, he says:

The digital surround style ultimately works to immerse the audience in the movie’s diegesis. This illusion, however, only works for stationary filmgoers. If a spectator moves, what had seemed to be a coherent, complete environment is suddenly revealed as an artificial representation clearly composed of multiple independent sources. (Kerins, 281)

If this holds for surround sound and the ultra-field in cinema, we might assume the same is true, if not more so, for higher-order spatial audio and VR. Companies investing in VR for video games will claim that it’s more immersive than playing on a television screen, but I’m not sure that the immersion created by a VR headset is entirely desirable given its current restrictions and the commercial applications it is being proposed for. The same, I think, could apply to higher-order ambisonic (HOA) speaker setups for installations or video, which have a small, ideal listening position for the audience. Kerins goes on to write:

The impression of reality is destroyed by movement. This illusion is shattered, the spectator becomes consciously aware of both the space of the theater and the constructed nature of the film. (Kerins, 282)

The loss of immersion is a much harder fall for Ambisonics and VR than it is for sitting in front of the TV or reading a book. The fewer bodily restrictions you place on the consumer, and the more the work can fit into the lived world of the audience, the more resilient I believe the work will be to the cracks in the immersion that will inevitably crop up.

Putting aside Ambisonics’ benefits regarding portability and the future-proofing of audio for different speaker orientations, I struggled with how best to contextualize the singular audience member these smaller HOA setups impose. In a live context, this restriction would put the audience and the performer at odds; the performer cannot react in real time since they and the audience cannot both inhabit the sweet spot simultaneously. In the Sound Lab, only one person can occupy the sweet spot. When the creator takes that spot, they become the audience of one. So I propose a TOA instrument intended not for galleries but as a meditative personal ritual.

I’ve been reading Curtis Roads’s Microsound on and off for several months and it’s helped me identify and solidify a theme in my own work, which often deals with sound grains and objects (either hand-placed or systematically generated). The former, of course, is deeply un-gestural and I spend an immense amount of labor positioning groups of very small sounds. The latter approach is more like painting, where inherent textures (unique to every brush) are generated with even simple strokes. Roads writes:

Cloud textures suggest a different approach to musical organization. In contrast to the combinatorial sequences of traditional mesh structure, clouds encourage a process of statistical evolution. (Roads, 2001 p.15)

A cloud-based organization of sound objects, governed by a larger particle system, fundamentally changes how a creator must approach a composition. The process of creating requires working in concert with a stochastic “black box”, understood only in the way it audibly or visually reveals glimpses of its inner workings to the user. When I work on my own music, I tend not to actually pan much, instead relying on the inherent stereo width of the synths or samples I use, maybe wrangling them in but rarely ever manually moving them around. Working in DEAR VR, or moving objects by hand in only two dimensions at a time, felt unmusical in a way. Surgical tools are needed for certain movements of course, but I also want to paint in spatial audio, allowing the system of particles to inform the gestures that I make. The instrument is in a feedback loop with the user. I suggest a move to it, and it suggests the next move to me.

It was important to this experiment that the sound only be changed by the movement and position of the particles. The controls serve only to guide and manipulate the behavior, only indirectly altering the sound. The important gestures I wanted to facilitate were variations in speed, spinning direction and separation. I felt that, by correctly tuning the parameters, these would create readable changes in the visuals and ambisonic field.
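A minimal sketch of those three gestures, written in Python rather than as the Max/Jitter patch itself. The orbit model, the `Particle` fields and every function name here are assumptions made for illustration; the point is only that the controls change particle motion, and the panner sees nothing but the resulting positions.

```python
import math
import random
from dataclasses import dataclass

@dataclass
class Particle:
    angle: float        # radians around the listener, 0 = front
    base_radius: float  # resting horizontal distance from the listener
    height: float       # vertical offset from ear level

def make_cloud(count, seed=0):
    """Scatter a cloud of particles around the listener (hypothetical ranges)."""
    rng = random.Random(seed)
    return [Particle(rng.uniform(0.0, 2.0 * math.pi),
                     rng.uniform(0.5, 1.0),
                     rng.uniform(-0.5, 0.5)) for _ in range(count)]

def step(cloud, dt, speed, spin):
    """Rotate every particle; spin is +1 (counter-clockwise) or -1 (clockwise)."""
    for p in cloud:
        p.angle = (p.angle + spin * speed * dt) % (2.0 * math.pi)

def to_polar(p, separation=1.0):
    """Return (azimuth_deg, elevation_deg, distance) to hand to a spatial panner."""
    radius = p.base_radius * separation  # separation spreads the cloud outward
    distance = math.hypot(radius, p.height)
    azimuth = math.degrees(p.angle)
    elevation = math.degrees(math.atan2(p.height, radius))
    return azimuth, elevation, distance
```

Calling `step` once per frame and passing each `to_polar` result to a panner keeps the sound tied purely to where the particles are, which is the constraint described above.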

What I created stands as a platform for more gestural control surfaces for ambisonics. Of course, when seeking verisimilitude this might not be desirable, but this particle system is fundamentally something that would have been too labor-intensive to replicate natively in a DAW or plugin. I had originally imagined drones, but sustained sounds do not seem to spatialize as well as sounds that change more noticeably over a short period of time. I added a bed of sounds recorded in Kelvingrove Park to provide a kind of static reference underneath the particles. I did not have access to an ambisonic microphone, so I took 5 recordings facing in all cardinal directions plus up, and then used an ambisonic panner to pan them accordingly.
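As a rough illustration of panning five directional recordings into an ambisonic bed, here is a first-order sketch in Python. It assumes the AmbiX convention (channel order W, Y, Z, X with SN3D normalisation) and treats "north" as the front; the direction labels and function names are hypothetical, and the actual piece used a higher-order panner inside Max.

```python
import math

# Hypothetical labels for the five recordings: (azimuth_deg, elevation_deg).
# Azimuth is measured counter-clockwise from the front (assumed to be north).
DIRECTIONS = {
    "north": (0.0, 0.0),
    "west": (90.0, 0.0),
    "south": (180.0, 0.0),
    "east": (-90.0, 0.0),
    "up": (0.0, 90.0),
}

def encode_gains(azimuth_deg, elevation_deg):
    """First-order AmbiX gains in the order W, Y, Z, X (SN3D)."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    return (1.0,
            math.sin(az) * math.cos(el),
            math.sin(el),
            math.cos(az) * math.cos(el))

def encode_bed(samples):
    """Mix one mono sample per recording into a single W, Y, Z, X frame."""
    frame = [0.0, 0.0, 0.0, 0.0]
    for name, sample in samples.items():
        for channel, gain in enumerate(encode_gains(*DIRECTIONS[name])):
            frame[channel] += gain * sample
    return frame
```

Running `encode_bed` over every sample of the five recordings gives a four-channel bed in which each recording sits in the direction it was facing when captured.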

I am neither a graphics nor a physics programmer, so my particle system is fairly rudimentary, but I’ve structured the patch so that it can be easily updated in the future to explore more gestures for moving clouds of sound objects through space, simply by changing the code in the jit.gen @title update patcher. Federico Foderaro has a good tutorial series on CPU-based particle systems in Jitter, which I referenced to control ICST’s ambisonics Max package.

Mono

Etched Vinyl Synth Spring 2023

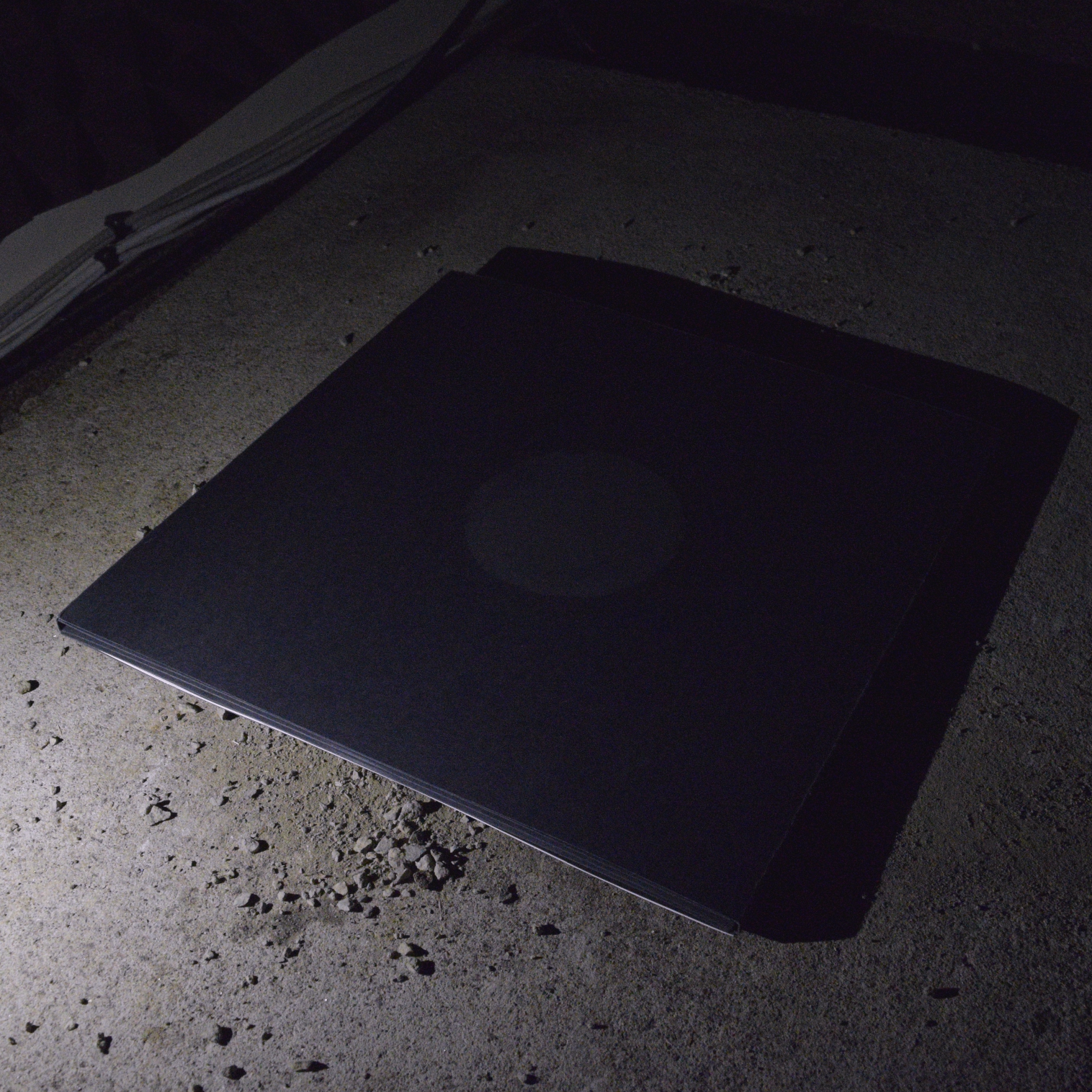

I have been thinking a lot about artificial scarcity recently. There is a constant rumbling of crypto and NFTs under the surface of experimental music right now, which holds that the value of a work lies in its scarcity, and that the only way to maintain the value of music (or any other digital art) is to make platforms enforce scarcity, rather than to consider the different forms in which the work can only exist in a limited number or on specific platforms.

I had a conversation with a collaborator recently about YouTube, which he believed would devalue his music. I prickled at this suggestion, having grown up digging endlessly through musicians' back catalogs late into the night. Like MTV before it, YouTube became the source for music videos, and directly influenced the expression of my art. I view image, performance, design and sound as integral parts of the music package. If music were viewed as separate from its existence within a larger context, as nothing more than sound on disc, then of course infinite portability and replicability would be a threat.

Vinyl publication is truly scarce on a physical level, with some runs being only a couple hundred records. Like mixtapes, they work well as art objects to be passed around and disseminated in a particular locale. That’s not to position analog and/or physical media above internet or digital versions of the same work. I have benefited from this ecosystem ever since I started to create sound, and it has informed my listening habits and sampling practice. In fact, I view Mono as a celebration of the formal differences of each storage medium. With the release of an upcoming album, I have been considering how to add value to my work on each platform it exists on, in a way that is native to that platform.

I’ve also been inspired by vinyl’s uniquely tactile nature. Other physical storage media for sound such as music boxes, wax cylinders, and punchcards do not maintain momentum like a record on a direct-drive turntable. This, I believe, makes the vinyl record an inherently musical storage device that can be played like an instrument. I hope to perform this work with live sampling/looping and effects processing, with a video record of the performance and the 3 discs living on as a secondary record: a tool for future performances that can be passed on to other artists.

Aside from the materials used, Mono was made entirely by hand with only a ruler, craft knife, and a tungsten carbide scribe. The anti-skate records had to be sourced from eBay in a small lot, which calls the long-term economic and environmental viability of the project into question. I intend to make these on demand by hand, following the patterns I etched on the first set of records, as long as I have vinyl blanks available, after which the project will be on hiatus.

The full text of the four-page booklet accompanying Mono is below:

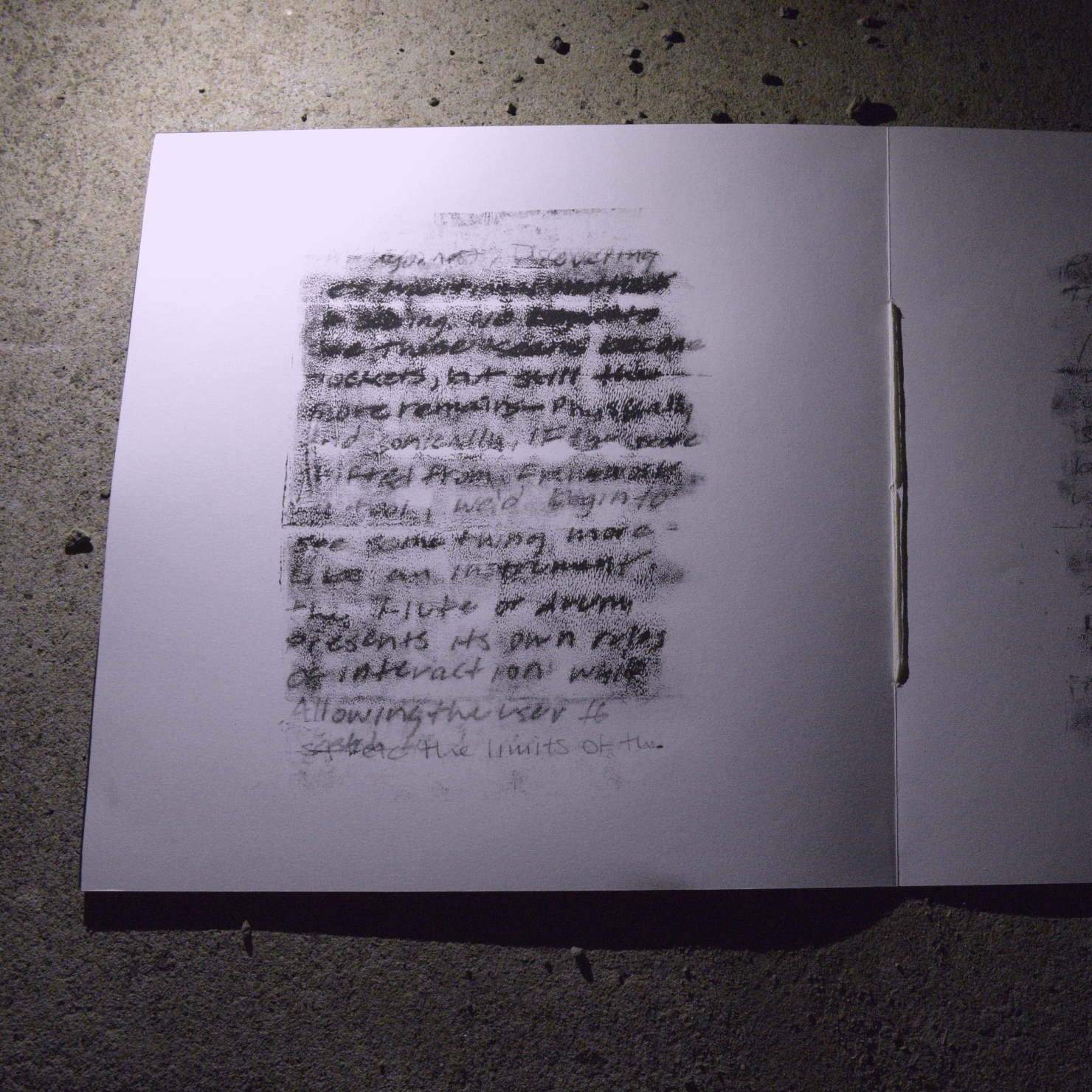

Mono

The score dictates the structure of performance. Rules which define the realization of the sound become frameworks of expression. In traditional engraving, emphasis is placed on the readability and replication. Given careful design for ease of reading and the understandable restrictions of written instructions for musicality, seams begin to open through which the performer can emerge, establishing themselves as either collaborator or antagonist. Discovering less traditional methods of scoring, we began to see these seams become pockets, but still the score remains, physically and sonically. If the score shifted from framework to tool, we’d begin to see something more like an instrument. The flute or drum presents its own rules of interaction while allowing the user to stretch the limits of the pocket with altered techniques. Vinyl lives on as the most accessible and portable physical storage media. It can be written to by the user with simple tools. It’s also radial, meaning beats and loops can be easily created. Digital and magnetic storage media allow for relatively easy and accurate replication but by engraving by hand, time and craft are reintroduced to recorded media. The luthier can experiment and tweak the sound and playability with each instrument they create. They create an archeology of their instruments.

Membranes W/ Ellen Crofton & Felicity White

French Street Studios, 103 French St, Glasgow G40 4EH 7:00 PM Mar 17 2023

Alight

Generative Montage Film Winter 2022

This winter, I hear the sound of songbirds when I wake up in the middle of the night. Coming from America, this is a completely new and surreal experience for me. Soundscapes I would associate with late mornings in springtime have flapped their way into my hazy recollections of stumbling to the bathroom during the dead hours of a cold, drizzly, January night.

My entire rhythm feels off recently; my internal clock is set to the changing of the seasons, but with the mildness of the Glasgow winter I find few temporal landmarks to hold on to. The blackbird's song flits around at the edges of my consciousness, like a dream; it’s too early for anyone to be up, and it’s not time for the daffodils to sprout through the last patches of snow. Noise pollution and light pollution have undoubtedly affected the patterns of living for both humans and non-humans. The clouds hanging low in the sky reflect the city in a strange way, lighting up the sky at night; bright stars set against a black sky give way to murky gradients of browns and purples. It reminded me of a quote from William Gibson’s Neuromancer: “[The sky] was the color of television, tuned to a dead channel.”

I have treated the concept of “clips” a little differently for this project. First, the slowly oscillating gradients have been generated live in Max, creating indiscernibly shifting patterns within a cloud of graininess. These gradients are selected from a list of hand-designed presets. Second, I’ve filmed the interference patterns of a light shining through water projected against a wall. The water itself is a highly complex system and the smallest of movements send giant ripples and unpredictable after-images across the screen. These water caustics are just one long video made up of several clips strung together, constantly playing in the background but randomly brought to the foreground using linear envelopes and transparency, as if to wing itself out of the darkness and disappear before you know what you’ve seen.
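The fade of the water footage in and out of the foreground can be sketched as a linear envelope driving opacity. This is an illustrative Python version, not the Max patch; the attack, hold and release segments and both function names are assumptions.

```python
def linear_envelope(t, attack, hold, release):
    """Opacity in [0, 1] at t seconds after the overlay is triggered."""
    if t < 0.0:
        return 0.0
    if t < attack:
        return t / attack                      # ramp up
    if t < attack + hold:
        return 1.0                             # fully visible
    if t < attack + hold + release:
        return 1.0 - (t - attack - hold) / release  # ramp down
    return 0.0

def blend(background, foreground, alpha):
    """Mix two pixel values using the envelope's opacity."""
    return (1.0 - alpha) * background + alpha * foreground
```

Triggering the envelope at random moments and feeding its value to `blend` for each frame lets the always-running background video surface briefly and then sink back into the gradient.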

The sounds of blackbirds and nightingales are created by randomly selecting a clip to play at a specific volume and with certain filter characteristics. This creates a better illusion of space, and the added variation makes repeated clips a little less noticeable. The specific clip is chosen at random, with every clip selected at least once before any can be selected again. The clips are triggered by an impulse train object whose tempo oscillates between several triggers per second and one every ten seconds. This creates a natural ebb and flow of intensity. Since the clips generally have different rhythms and begin at different times, the effect is still natural and doesn’t sound sequenced. To add subtle variations, I transition between random sets of parameters roughly with each cut to a new image.
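A minimal Python sketch of the two mechanisms described above: a "shuffle bag" that plays every clip once before any repeats, and a trigger train whose rate drifts between fast and slow. The class and function names, the cosine-shaped drift and the default rates are assumptions for illustration, not a transcription of the Max patch.

```python
import math
import random

class ShuffleBag:
    """Pick items at random, using every item once before any repeats."""

    def __init__(self, items, seed=None):
        self._items = list(items)
        self._rng = random.Random(seed)
        self._bag = []

    def pick(self):
        if not self._bag:
            # Refill and reshuffle only once the bag has been emptied.
            self._bag = self._items[:]
            self._rng.shuffle(self._bag)
        return self._bag.pop()

def trigger_times(duration, fast_rate=3.0, slow_rate=0.1, lfo_period=60.0):
    """Trigger times in seconds; the rate drifts between fast_rate and slow_rate (Hz)."""
    times, t = [], 0.0
    while t < duration:
        times.append(t)
        # A cosine LFO in [0, 1] interpolates between the two rates.
        lfo = 0.5 * (1.0 + math.cos(2.0 * math.pi * t / lfo_period))
        rate = slow_rate + (fast_rate - slow_rate) * lfo
        t += 1.0 / rate
    return times
```

Pairing each trigger time with `bag.pick()` plus a random volume and filter setting gives dense bursts of birdsong that thin out to isolated calls and back again.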

I have been fascinated by the application of noise throughout all my projects for its psycho-acoustic and psycho-visual effects. When filtered and modulated correctly, noise sources are incredibly versatile and can take on any source we ascribe to them. Noise's interactions with the sounds around it are complex, and our brains, trying to make sense of the noise, begin to attribute the subtle variations to different imagined sources. Just while creating this piece, I began to hear the sound of the freeway, the muffled sound of wind and the rumble of a truck going past. The grain in the video is completely faked too but serves the same purpose; it can produce odd figures in the dark as the mind tries to reconcile the lack of information. I like to play with how information peeks out through entropy like this. Above the cacophony of city life, the birds have adapted to the changes in climate and the changes in the urban soundscape to announce themselves through my nightly dreams.

Overall there isn’t that much complexity in the models of chance working here to select the clips, but the pacing and fine-tuning of parameters was key to achieving a natural, dream-like feel to the visuals, and a believable, non-repeating soundscape for the audio. Most of the heavy lifting is done by the clips themselves, and a lot of effort was put into finding the most natural way to transition between them. I believe this dream-like “space-telling” (as opposed to story-telling) is especially well suited to this generative film structure. While a lot of complexity and content was left on the cutting room floor, I felt like the end result was more focused, and I count this project as a success as it is the first time I felt like I was really “painting” with Max.

Seers

Walking Simulator Spring 2020

Seers was an interactive tone piece built in April 2020 as a prologue to a wider story I have yet to return to. In a world which has gone grey during the heat death of the universe, the Seers pluck the now visible spirits and memories from the air to rebuild society.

Seers interacted with your filesystem, picking and displaying photos from your machine as you built web-like structures across the landscape. Security roadblocks made it difficult to make this more widely available at the time.

All programming, modelling, animation, and audio for this project was done solo.

wwww

Web Interfaces for Ableton Live Fall 2019

In the 1950s, in the process of making the St. Lawrence River more traversable for large ships heading to the Great Lakes, a series of dams and locks was built. To deepen and widen the river, channels were cut into the river bed and a whole section of rapids called the Long Sault had to be flooded. Caught in the flooding was a set of towns now called the Lost Villages. These towns are now hidden, completely submerged in the river, but they can be seen in satellite images. I stumbled across these images in the fall on a whim, after a vague memory of my mom telling me this story when I was younger. The photos are striking, as you can clearly see old roads and buildings underneath the water. What I found most impactful were the places I remember visiting as a child, where certain side roads abruptly vanished into the water. What I once thought to be a simple boat launch at the town beach is in reality a road that stretches the width of the river and crosses the border into Canada. I wanted to capture the sound I remember from swimming in the river, my head submerged, hearing the deep vibrations emanating from the enormous ocean-going ships all the way on the other side of the channel. I wanted to capture the feeling of rooting through old photos, of dredging up clay from the river bed, of frantically trying to collect memories before they slip like water out of my head.

Technically, I was exploring the possibilities of using web structures and interfaces for performing musical pieces, and their ability to engage a player more directly with the thematic content of the piece. Ultimately, there are better technologies for adding audio to websites, and for adding interactive visuals to live sets, but the fundamental ideas explored in this project later became the foundation for Cosmolin.

wwww stands for world-wide web workstation. It also looks like a triangle wave.

Like a House Settling in the Night

Program Reflection Performance Fall 2019

Like a House Settling in the Night was a project developed and performed entirely in Max's patching window using Max's built-in patcher "reflection" objects, allowing me to move objects around the window, make new connections and destroy objects.

At the time I was exploring ideas of memory, and how memories become rewired, distorted and ultimately complete fabrications over time. The slow demolition of the patch itself reflects this. It's an idea I hope to return to at some point in the future.

Website

I’ve maintained a personal website for about 6 years at this point, generally dedicating time around the holidays and early/late summer to keeping it updated. When I was working in software development, this schedule sort of fell apart and I left it unmaintained for several years. Even when I was working on it biannually, there was usually some unnecessary heavy lifting that had to be done. Such is the constantly shifting nature of web development that the discovery of a new tool would prompt an overhaul of the site's architecture. In an effort to streamline the process of adding projects, I used increasingly complex static site generation stacks, which I would promptly forget how to use in the intervening college semesters. I would then have to spend several days getting back up to speed before I invariably became dissatisfied with the tools I was using and with how I had designed the site several months before. On top of this, I rarely work in the same medium, and many projects require their own format, which an over-engineered build process tended to make difficult. I want this site to be a playground which facilitates more exploration with format.

To that end, I have opted to build most of this site out with basic templating on a single page, to make it easier to get to grips with when I sporadically return to update a project. Of course, this also helps me resist the urge to break everything down when I return.

I use gulp to facilitate HTML partials. I'd prefer that this site be fast, clean, and easily extensible for when I need to add a new project. Because the nature of my work is varied, I've tried not to tie myself to any particular layout.

This site is a largely chronological collection of works, loose thoughts, and upcoming events. I’d like to keep that dichotomy fuzzy, so tense is loose throughout all writing. Sections on older projects are likely to remain at the bottom of the page unless some relevant development makes them fundamentally a new project. I will make edits in the past tense.

With that said: the obligatory this-site-is-still-in-progress, and always will be; there's a lot of text to go through.

About

I am a Creative Developer and Sound Designer based in Chicago, with work ranging from professional software development to audio-visual performance pieces to post-production audio. My primary personal work centers on exploring interactive audio-visual systems, with my more recent work in games as frameworks for musical improvisation. I occasionally produce and perform music under my own name or as Protection Spells for more dance-oriented work. Live-projection and graphics work is often done under these monikers as well.

I studied Computer Science and Music at Brown University in Providence, Rhode Island, with additional coursework in Linguistics and Visual Art.

I did graduate studies in Sound for the Moving Image at Glasgow School of Art in Scotland.